A/B testing

The Constructor dashboard supports A/B testing to help retailers optimize manual settings. With this capability, merchandisers can A/B test global rule configurations as well as different Recommendations strategies.

Introduction

Test setup options

There are three types of A/B tests that can be performed from within the dashboard:

- Global search rules

- Global browse rules

- Recommendations strategies

One test of each type can run at the same time. Merchandisers can choose how traffic is split between the variants, which determines what portion of users see the test experience.
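The dashboard applies the traffic split for you. To illustrate how a split ratio can be applied consistently, so that a returning user always sees the same variant, here is one common approach, deterministic hash-based bucketing, sketched in Python. This is an illustrative assumption, not Constructor's implementation; the function `assign_variant` and its parameters are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, test_name: str, test_share: float) -> str:
    """Hypothetical helper: deterministically assign a user to 'control' or 'test'.

    test_share is the fraction of users (0.0 to 1.0) who should see the
    test experience, e.g. 0.5 for a 50/50 split or 0.2 for an 80/20 split.
    """
    if not 0.0 <= test_share <= 1.0:
        raise ValueError("test_share must be between 0 and 1")
    # Hash the test name together with the user ID so each test gets an
    # independent split and the same user always lands in the same bucket.
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "test" if bucket < test_share else "control"


# Example: with a 20% test share, roughly 1 in 5 users sees the test experience.
variants = [assign_variant(f"user-{i}", "own-brand-boost-test", 0.2) for i in range(10_000)]
print(variants.count("test") / len(variants))
```

Hashing rather than random assignment at request time means no per-user state has to be stored to keep the experience stable across visits.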

Control & experimental variants

An A/B test splits traffic between two visitor groups to determine whether a change to the experience (the experimental variant) produces a statistically significant difference compared with the default experience (the control variant).

For global search and browse rules, the control variant keeps all rules enabled (the default experience), while the experimental variant turns the selected rules off. For example, if your own brands are boosted by default, you can run a test that turns off these own-brand boosts to measure the overall change in metrics.
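Put another way, the experimental variant's rule set is the control's rule set minus the rules selected for the test. A minimal Python sketch of that relationship, assuming rules can be identified by name; the function `active_rules` and the rule names are hypothetical examples, not Constructor identifiers:

```python
def active_rules(all_rules: set[str], rules_under_test: set[str], variant: str) -> set[str]:
    """Hypothetical helper: return the global rules applied for a variant."""
    if variant == "control":
        # Control: the default experience, with every rule enabled.
        return set(all_rules)
    if variant == "test":
        # Experimental variant: the rules selected for the test are turned off.
        return all_rules - rules_under_test
    raise ValueError(f"unknown variant: {variant!r}")


# Example using the own-brand boost scenario (rule names are made up).
rules = {"boost_own_brands", "bury_out_of_stock"}
print(active_rules(rules, {"boost_own_brands"}, "test"))  # {'bury_out_of_stock'}
```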

For recommendations, the control variant is the strategy currently set on a recommendations pod, and it can be compared against any of the other strategies available for that pod. For example, if a recommendations pod on your PDP shows complementary items, you can run a test that shows alternative items instead to determine which strategy works best on that page.

How to use the A/B Testing feature

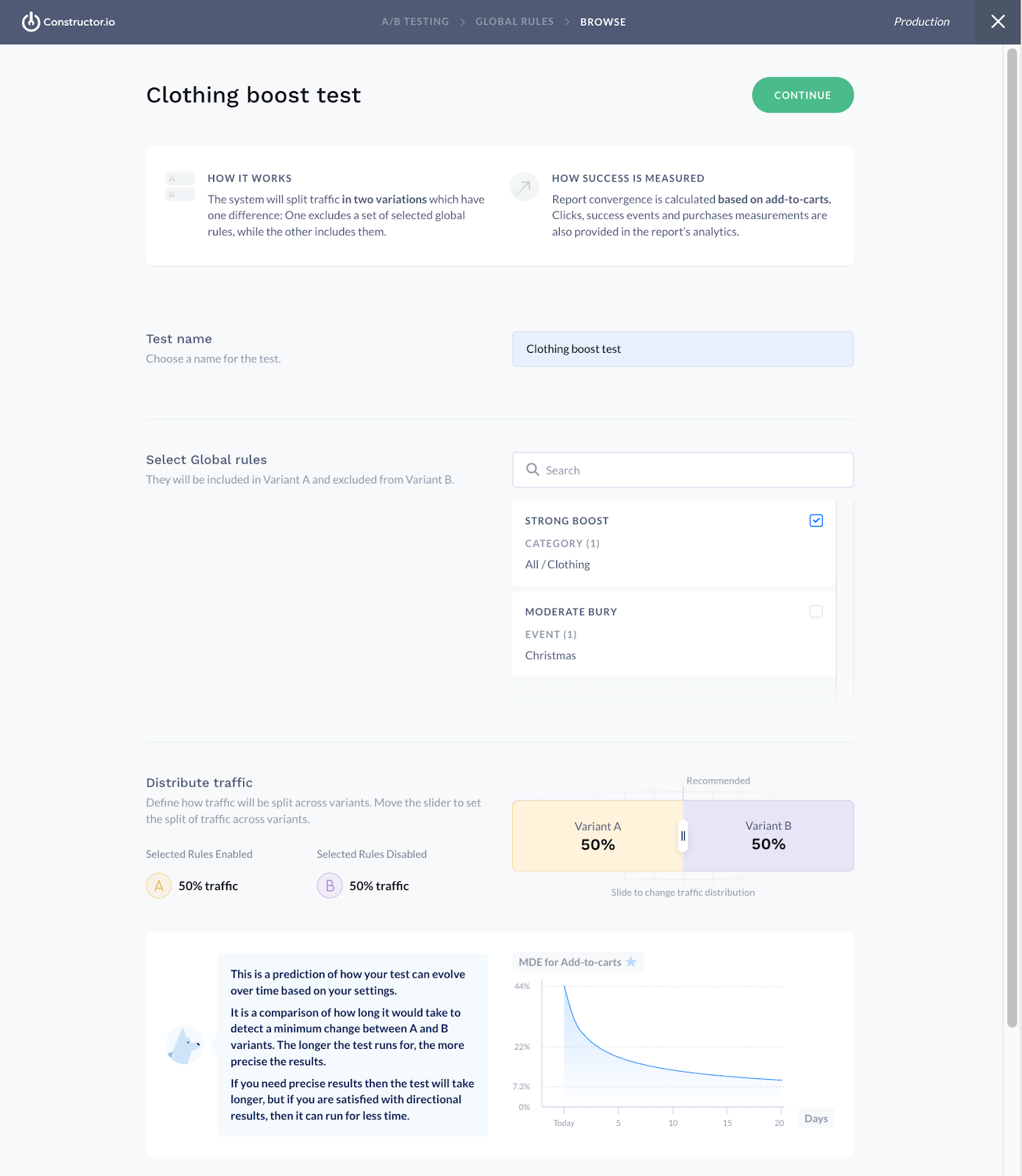

Set up a global rules A/B test

Merchandisers can set up an A/B test on global search or browse rules by configuring the following settings on the test setup page:

- Test Name - to refer to the test in the future and navigate to results by name.

- Select Global rules - select which rules should participate in the test.

- Distribute Traffic - select what portion of your users should see the test experience.

A/B testing dashboard

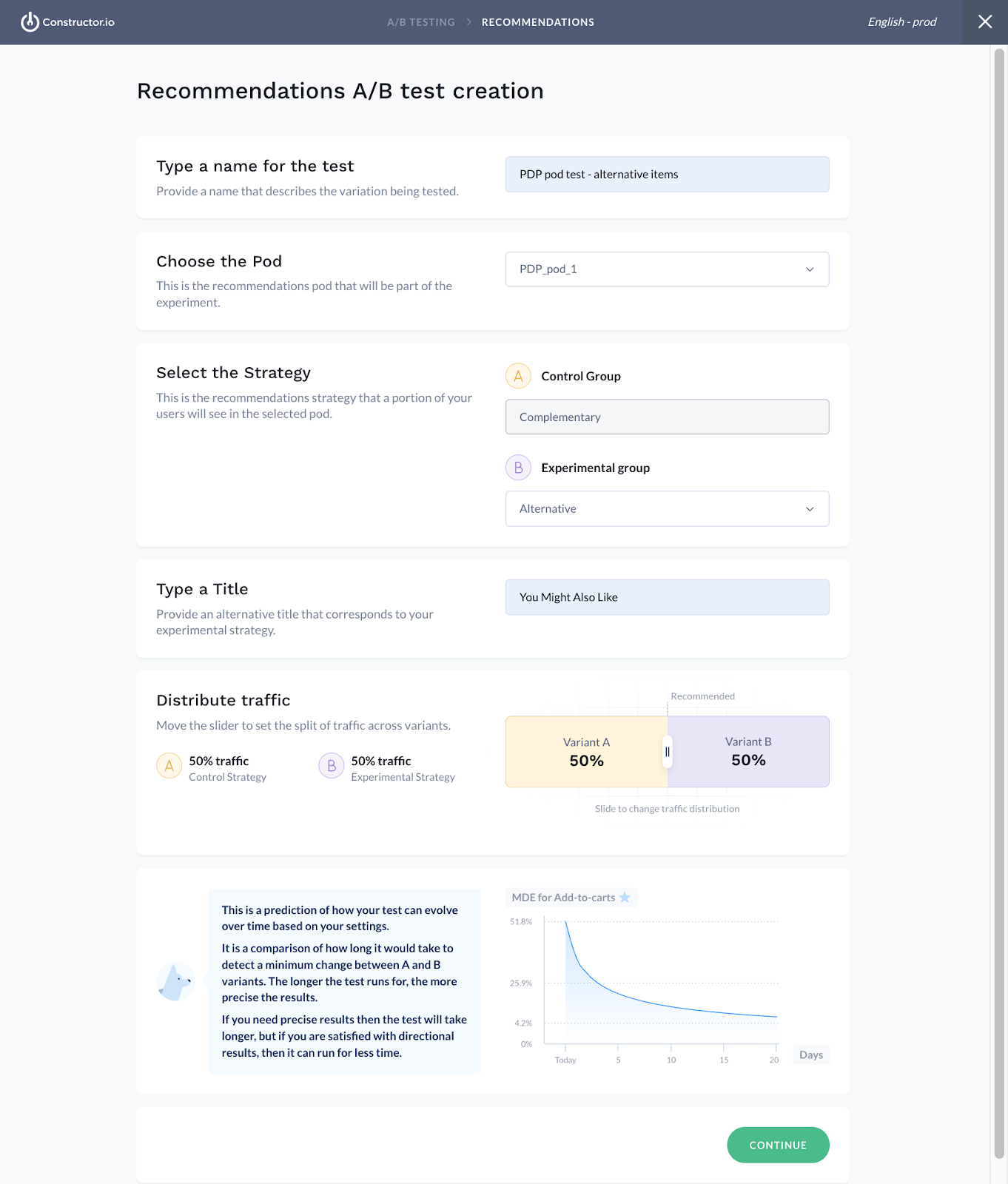

Set up a Recommendations A/B test

Merchandisers can set up an A/B test on an existing recommendations pod by configuring the following settings within the test setup page:

- Test Name - to refer to the test in the future and navigate to results by name.

- Choose the Pod - select which recommendations pod should participate in the test.

- Select the Strategy - choose which strategy should be tested against the control strategy.

- Type a Title - this title is returned in the API so that it can be displayed to users who see the test strategy.

- Distribute Traffic - select what portion of your users should see the test experience.

Recommendation A/B test set up in the dashboard

Note that some recommendations strategies can only be tested if we receive seed items from your website. If you see an error when selecting the experimental strategy, contact us to find out what to do.

MDE chart

At the bottom of the setup page for every test type, a minimum detectable effect (MDE) chart estimates how long the test will need to run to reach the level of accuracy you want. In general, we recommend targeting a 3% MDE, which gives your results a high degree of confidence. If you only need directional results, a higher MDE (and therefore lower accuracy) may be sufficient. The more traffic your site (for global rules) or recommendations pod receives, the sooner the test reaches the confidence and accuracy you need. While the test runs, we recalculate the MDE chart to show how it is progressing toward your goal.
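The calculation behind the MDE chart is not documented here. As a rough illustration of why a smaller MDE takes longer and more traffic shortens a test, the Python sketch below uses the standard sample-size formula for comparing two conversion rates (two-sided test, 5% significance and 80% power by default). It assumes the MDE is a relative change in a conversion-style metric and that both variants need the same sample size; the function names are hypothetical, and the estimates will not necessarily match the dashboard.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in EACH variant to detect a relative change of relative_mde."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("baseline and target rates must lie strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_reach_mde(baseline_rate: float, relative_mde: float, daily_users: float,
                      test_share: float = 0.5, alpha: float = 0.05, power: float = 0.8) -> int:
    """Estimated days until both variants reach the required sample size."""
    if not 0 < test_share < 1:
        raise ValueError("test_share must be strictly between 0 and 1")
    n = sample_size_per_variant(baseline_rate, relative_mde, alpha, power)
    # The smaller group fills up last, so it determines the test duration.
    smallest_group_per_day = daily_users * min(test_share, 1 - test_share)
    return math.ceil(n / smallest_group_per_day)


# Example: a 3% MDE needs far more users than a 10% MDE at the same baseline rate.
print(sample_size_per_variant(0.10, 0.03), sample_size_per_variant(0.10, 0.10))
print(days_to_reach_mde(0.10, 0.03, daily_users=20_000))
```

Because the required sample grows with the inverse square of the detectable difference, tightening the MDE increases test duration much faster than linearly.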

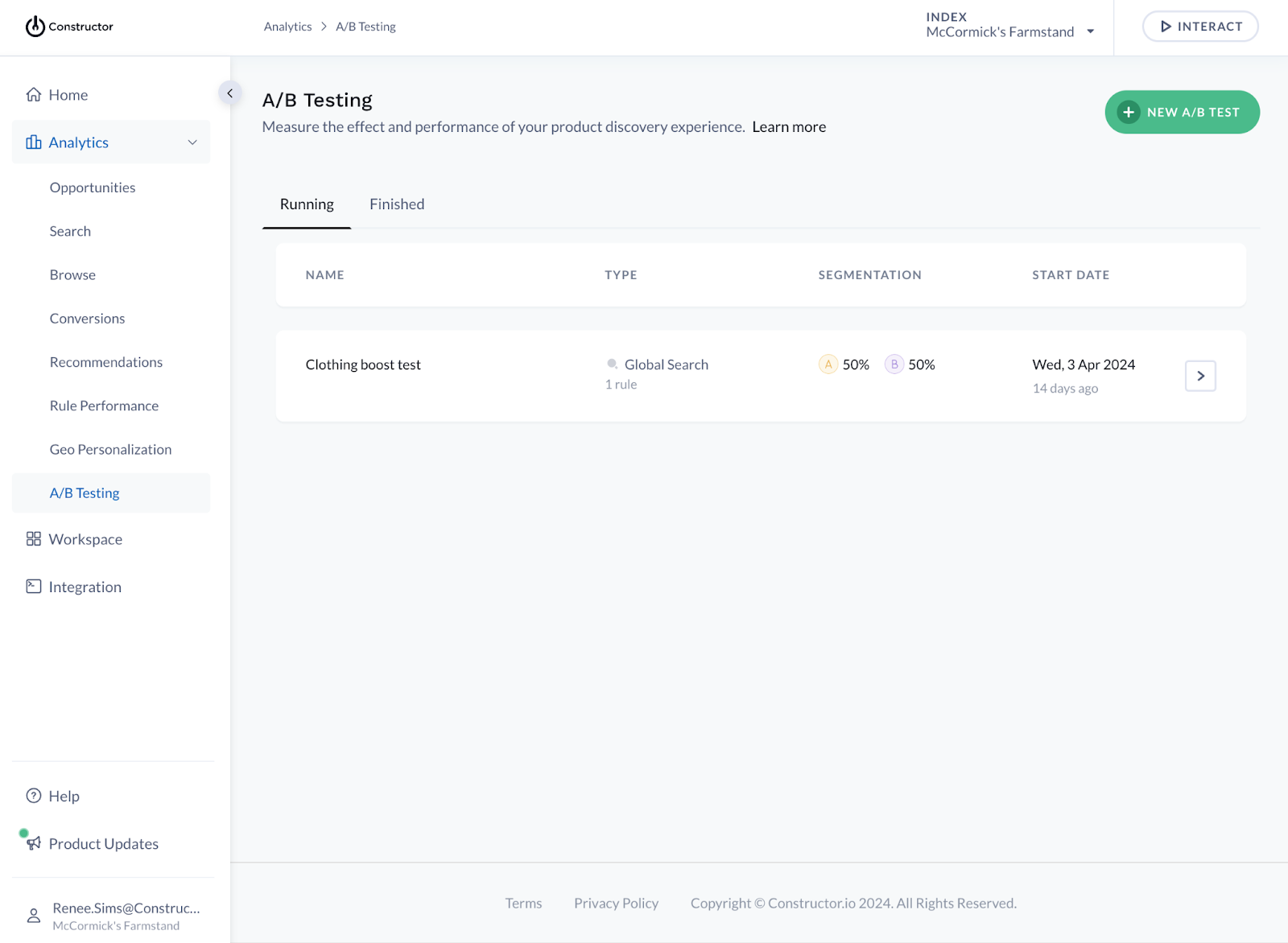

A/B test list view

All running and completed tests can be seen in the test list view under separate tabs. Results for each test can be viewed by clicking on the test.

Live test view in the dashboard

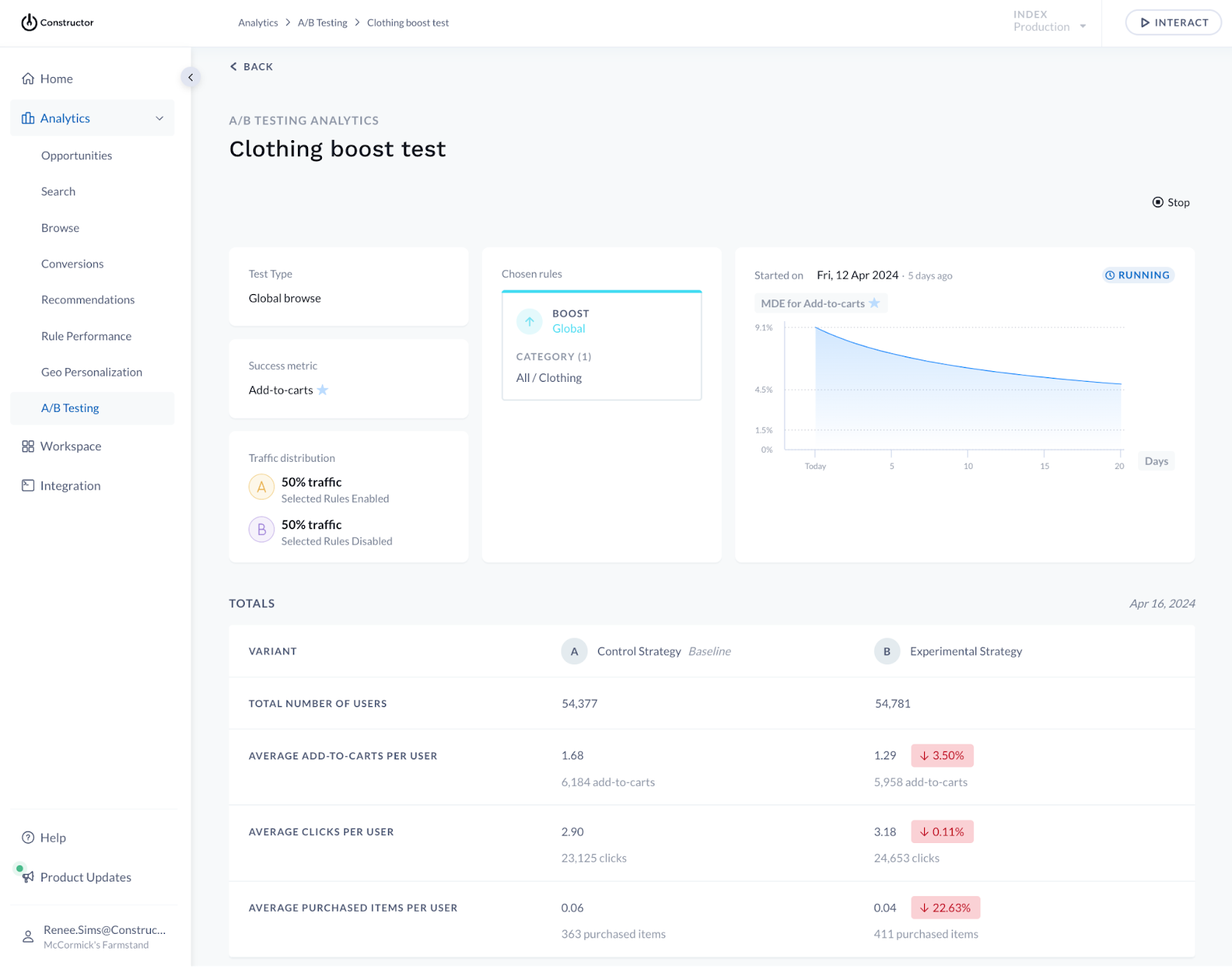

Report view

The A/B Testing report view becomes available one day after the test starts running. However, the results should not be treated as statistically significant until the required sample size has been reached.
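To show why early results can be misleading, here is a two-proportion z-test in Python, a standard way to check whether a difference in conversion rates is statistically significant. It is an illustration only; the report view's own statistical method is not described here, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_control: int, n_control: int,
                           conv_test: int, n_test: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    p_control = conv_control / n_control
    p_test = conv_test / n_test
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_control + conv_test) / (n_control + n_test)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_test - p_control) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# The same 10% vs 12% conversion rates, at two different sample sizes:
print(two_proportion_p_value(10, 100, 12, 100))          # small sample: not significant
print(two_proportion_p_value(1000, 10000, 1200, 10000))  # large sample: significant
```

The same observed lift is inconclusive with a few hundred users and clearly significant with tens of thousands, which is why waiting for the required sample size matters.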

Control vs. variant metric table

Metrics for the control and variant are shown side by side in a table for easy comparison. Changes on the variant results are highlighted in green or red so you can quickly see whether each metric went up or down.
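The comparison in that table amounts to a percentage change per metric, colour-coded by direction. A minimal Python sketch, assuming green means the metric went up and red means it went down; the function `compare_metrics`, the metric names, and the sample values are hypothetical and for illustration only:

```python
from typing import Optional


def compare_metrics(control: dict[str, float],
                    test: dict[str, float]) -> list[tuple[str, float, float, Optional[float], str]]:
    """Return (metric, control, test, percent change, colour) rows."""
    rows = []
    for metric, control_value in control.items():
        test_value = test[metric]
        if control_value == 0:
            # A percentage change is undefined when the control value is zero.
            rows.append((metric, control_value, test_value, None, "none"))
            continue
        change = (test_value - control_value) / control_value * 100
        colour = "green" if change > 0 else "red" if change < 0 else "none"
        rows.append((metric, control_value, test_value, change, colour))
    return rows


# Illustrative values only.
for metric, c, t, change, colour in compare_metrics(
        {"conversion_rate": 0.10, "revenue_per_user": 5.0},
        {"conversion_rate": 0.11, "revenue_per_user": 4.0}):
    label = "n/a" if change is None else f"{change:+.1f}%"
    print(f"{metric:<18}{c:>8.3f}{t:>8.3f}{label:>9}  {colour}")
```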

In addition, the MDE chart is updated daily and shows how much longer your test needs to run to reach the level of accuracy you want.

A/B testing controls in the dashboard

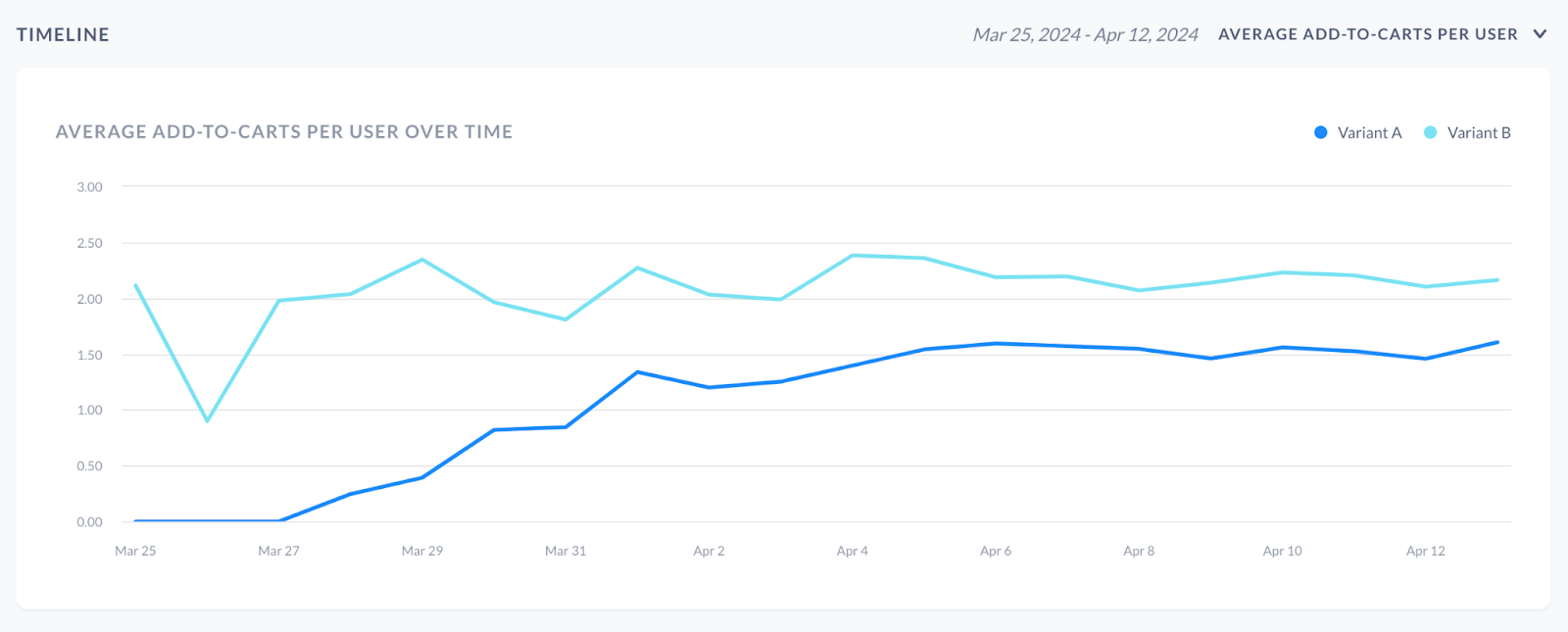

Timeline view

Below the results table, a timeline view shows each metric over time for the two variants. This helps you monitor results while the experiment is running and review them after it finishes.
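One common way to plot a metric over time in an A/B test is a cumulative rate per variant, which smooths day-to-day noise as data accumulates. The Python sketch below assumes daily conversion and user counts per variant are available; whether the dashboard plots cumulative or daily values is not stated here, and the function name is hypothetical.

```python
from itertools import accumulate


def cumulative_rates(daily_conversions: list[int], daily_users: list[int]) -> list[float]:
    """Conversion rate to date, for each day of the test."""
    if len(daily_conversions) != len(daily_users):
        raise ValueError("need one conversion count per day of traffic")
    running_conversions = accumulate(daily_conversions)
    running_users = accumulate(daily_users)
    # Guard against days before any traffic has arrived.
    return [c / u if u else 0.0 for c, u in zip(running_conversions, running_users)]


# Illustrative counts for one variant: day 1 converts 10/100, day 2 converts 30/100.
print(cumulative_rates([10, 30], [100, 100]))  # [0.1, 0.2]
```

Running the same function for the control and the variant gives the two lines to compare on the timeline.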

Timeline view of the A/B tests in the dashboard
